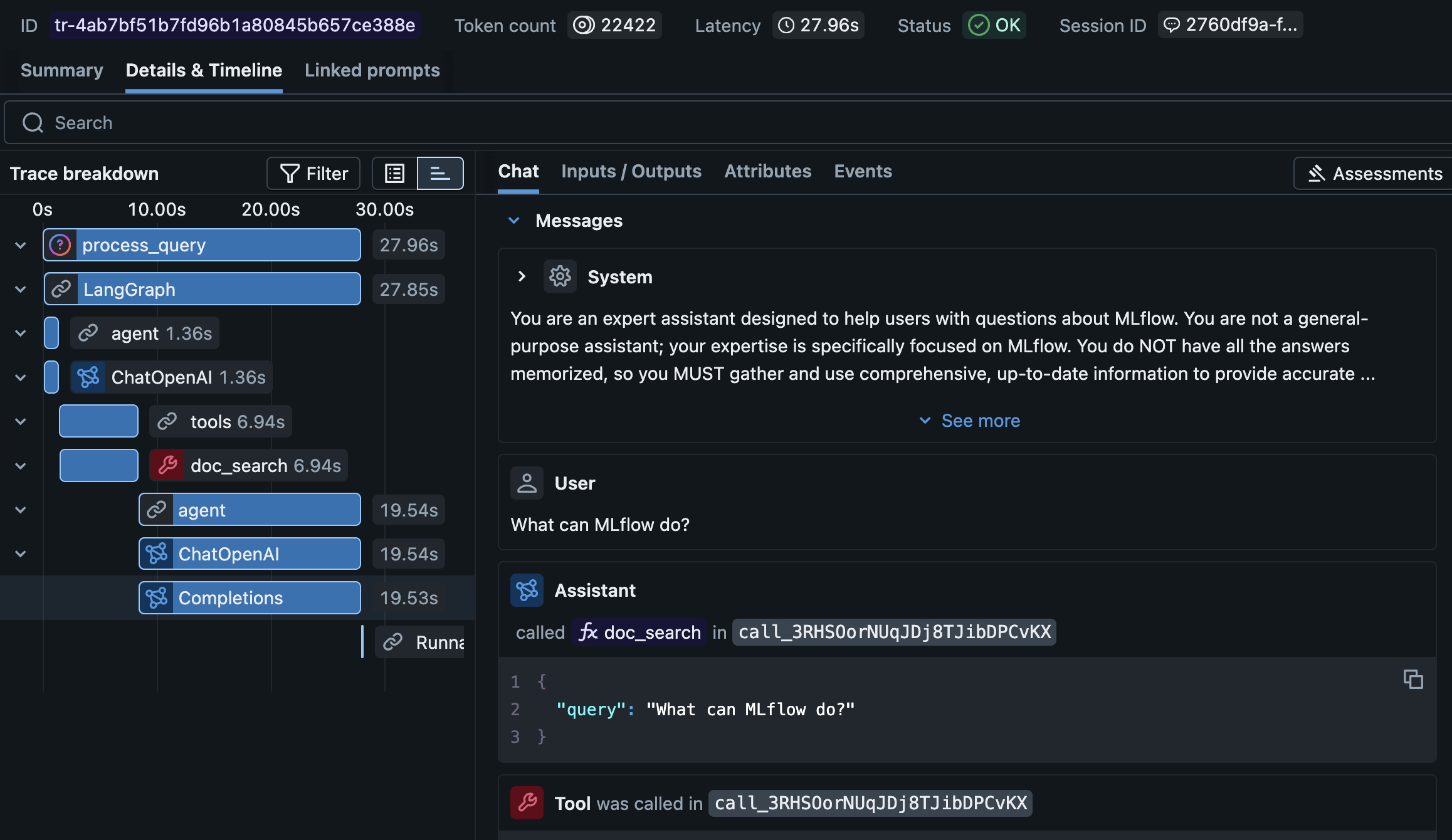

Observability

Capture complete traces of your LLM applications and agents to get deep insights into their behavior. Tracing is built on OpenTelemetry and supports any LLM provider and agent framework.
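To make "complete traces" concrete, here is a minimal, standard-library-only sketch of what a trace records: nested spans, each with a name, inputs, output, and duration. The `traced` decorator, the `Span` class, and the `SPANS` list are hypothetical names used for illustration. This is not MLflow's implementation; in MLflow itself the `@mlflow.trace` decorator plays the analogous role.

```python
import functools
import time
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class Span:
    """One traced function call (hypothetical structure for illustration)."""
    name: str
    inputs: dict
    parent: Optional[str] = None
    output: Any = None
    duration_s: float = 0.0


SPANS: list = []   # finished spans, in completion order
_stack: list = []  # spans that are currently open


def traced(fn):
    """Record a span for every call to `fn`, linked to the enclosing span."""
    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        parent = _stack[-1].name if _stack else None
        span = Span(fn.__name__, {"args": args, "kwargs": kwargs}, parent)
        _stack.append(span)
        start = time.perf_counter()
        try:
            span.output = fn(*args, **kwargs)
            return span.output
        finally:
            # Close the span even if the call raised.
            span.duration_s = time.perf_counter() - start
            _stack.pop()
            SPANS.append(span)
    return wrapper


@traced
def retrieve(query: str) -> list:
    return [f"doc about {query}"]


@traced
def answer(query: str) -> str:
    docs = retrieve(query)
    return f"Answer based on {len(docs)} document(s)"


answer("tracing")
for span in SPANS:
    print(span.name, "parent:", span.parent)
```

The inner `retrieve` call finishes first and is recorded as a child of `answer`, which is the parent-child structure a trace viewer renders as a tree.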

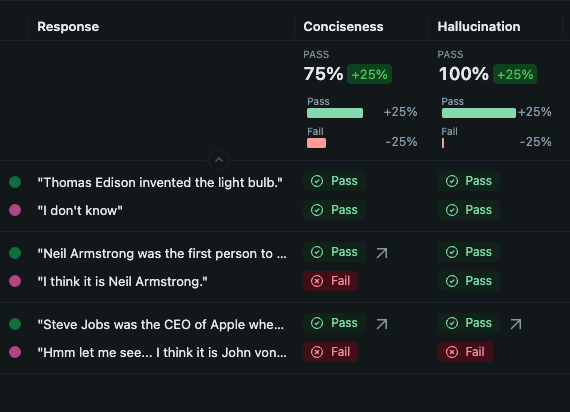

Evaluation

Run systematic evaluations, track quality metrics over time, and catch regressions before they reach production. Choose from 50+ built-in metrics and LLM judges, or define your own with highly flexible APIs.
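As a rough illustration of the idea, the sketch below scores an application against a small dataset with a custom metric and flags a regression against a stored baseline. It uses only the standard library; `exact_match`, `evaluate`, `has_regressed`, the dataset, and the tolerance are all assumptions made for this example, not MLflow APIs.

```python
from statistics import mean


def exact_match(prediction: str, expected: str) -> float:
    """Custom metric: 1.0 if the normalized strings match, else 0.0."""
    return float(prediction.strip().lower() == expected.strip().lower())


def evaluate(predict, dataset, metric) -> float:
    """Average a metric over (input, expected) pairs."""
    return mean(metric(predict(x), y) for x, y in dataset)


def has_regressed(score: float, baseline: float, tolerance: float = 0.05) -> bool:
    """Flag a regression when the score drops more than `tolerance` below baseline."""
    return score < baseline - tolerance


# Hypothetical evaluation set and application under test.
dataset = [("2+2", "4"), ("capital of France", "Paris"), ("3*3", "9")]


def candidate(question: str) -> str:
    answers = {"2+2": "4", "capital of France": "paris"}
    return answers.get(question, "unknown")


score = evaluate(candidate, dataset, exact_match)
print(f"score={score:.2f} regressed={has_regressed(score, baseline=1.0)}")
```

Running a check like this on every change is one way to catch a quality drop before it ships; a real setup would swap in richer metrics or LLM judges.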

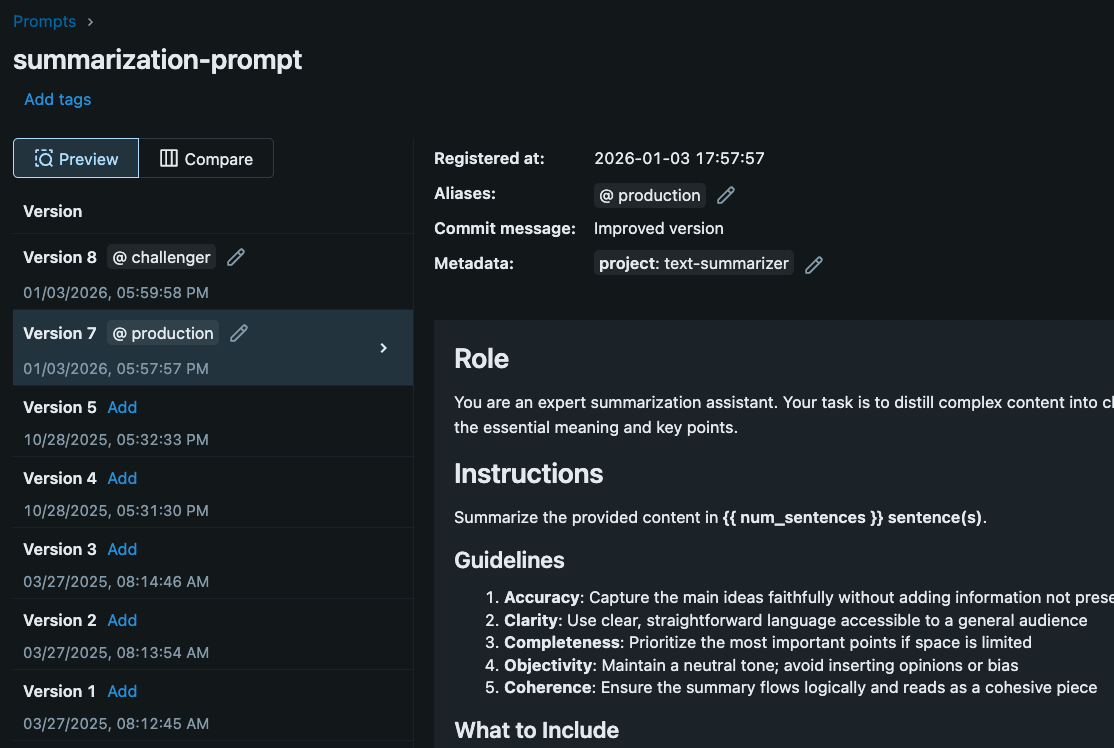

Prompts & Optimization

Version, test, and deploy prompts with full lineage tracking. Automatically optimize prompts with state-of-the-art algorithms to improve performance.

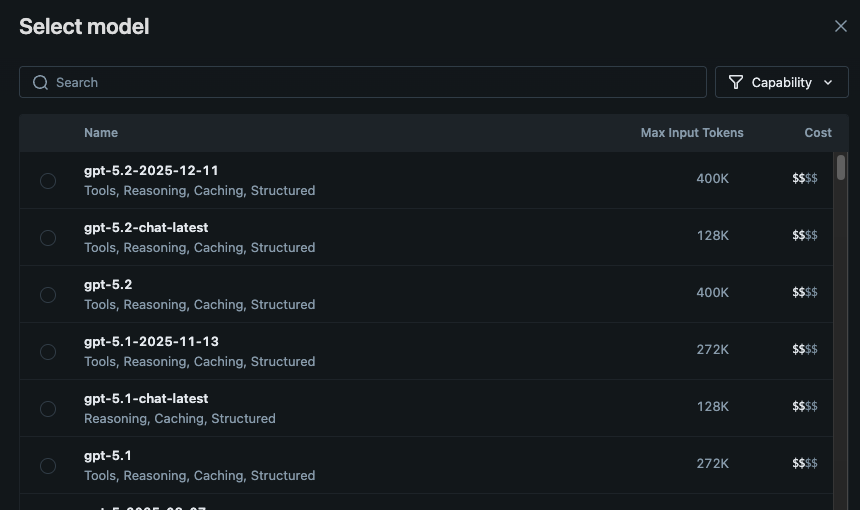

AI Gateway

A single API gateway for all LLM providers. Route requests, manage rate limits, handle fallbacks, and control costs through one OpenAI-compatible interface.
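The fallback behavior can be sketched in a few lines. The example below is a conceptual, standard-library-only illustration: `route_with_fallback`, `ProviderError`, and the two stand-in providers are hypothetical and do not reflect how the AI Gateway is implemented or configured.

```python
from typing import Callable, List, Tuple

Provider = Callable[[str], str]


class ProviderError(Exception):
    """Raised when a provider cannot serve a request (e.g. rate limited)."""


def route_with_fallback(
    prompt: str, providers: List[Tuple[str, Provider]]
) -> Tuple[str, str]:
    """Try providers in order and return (provider_name, reply) from the first success."""
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except ProviderError as exc:
            errors.append(f"{name}: {exc}")
    raise ProviderError("all providers failed: " + "; ".join(errors))


# Stand-in providers; a real gateway would call remote LLM APIs here.
def primary(prompt: str) -> str:
    raise ProviderError("rate limit exceeded")


def backup(prompt: str) -> str:
    return f"echo: {prompt}"


used, reply = route_with_fallback("hello", [("primary", primary), ("backup", backup)])
print(used, reply)
```

Because callers see a single entry point, the ordering of providers can change without touching application code.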

Agent Server

Deploy agents to production with a single command. The MLflow Agent Server provides a FastAPI-based hosting solution with automatic request validation, streaming support, and built-in tracing, so you can go from prototype to production endpoint in minutes.

```python
from mlflow.agent_server import AgentServer, invoke, stream
from mlflow.types.agent import ResponsesAgentRequest, ResponsesAgentResponse

# `agent` and `Runner` come from your agent framework and are assumed
# to be defined or imported elsewhere in this module.


@invoke()
async def run_agent(request: ResponsesAgentRequest) -> ResponsesAgentResponse:
    # Convert the request's input items to plain dicts for the agent.
    msgs = [i.model_dump() for i in request.input]
    result = await Runner.run(agent, msgs)
    return ResponsesAgentResponse(
        output=[item.to_input_item() for item in result.new_items]
    )


# Start the server
agent_server = AgentServer("MyAgent")
agent_server.run(app_import_string="server:app")
```

Open Source

100% open source under the Apache 2.0 license. Forever free, no strings attached.

No Vendor Lock-in

Works with any cloud, framework, or tool you use. Switch vendors anytime.

Production Ready

Battle-tested at scale by Fortune 500 companies and thousands of teams.

Full Visibility

Complete tracking and observability for all your AI applications and agents.

Community

20K+ GitHub stars, 900+ contributors. Join the fastest-growing AIOps community.

Integrations

Works out of the box with LangChain, OpenAI, PyTorch, and 100+ AI frameworks.

Start MLflow Server

One command to get started. Docker setup is also available.

Enable Logging

Add minimal code to start capturing traces, metrics, and parameters.

Run Your Code

Run your code as usual. Explore traces and metrics in the MLflow UI.
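The three steps above might look like the following in practice. This is a minimal sketch, assuming a tracking server is already running locally (for example, started with `mlflow server`) and reachable at `http://localhost:5000`; the experiment name, the `greet` function, and the logged values are hypothetical.

```python
def run_with_logging(tracking_uri: str = "http://localhost:5000") -> None:
    """Point MLflow at a tracking server, then log a trace, a parameter, and a metric."""
    # Imported lazily so this module still loads where MLflow is not installed.
    import mlflow

    mlflow.set_tracking_uri(tracking_uri)  # assumed local server address
    mlflow.set_experiment("quickstart")    # hypothetical experiment name

    @mlflow.trace
    def greet(name: str) -> str:
        # Each call is captured as a trace with its inputs and output.
        return f"Hello, {name}!"

    with mlflow.start_run():
        mlflow.log_param("greeting_style", "plain")
        mlflow.log_metric("greeting_length", len(greet("MLflow")))


if __name__ == "__main__":
    run_with_logging()
```

After the script runs, the run and its trace should appear under the chosen experiment in the MLflow UI at the same address.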

MLflow is the largest open source AI engineering platform. MLflow enables teams of all sizes to debug, evaluate, monitor, and optimize production-quality AI agents, LLM applications, and ML models while controlling costs and managing access to models and data. With over 30 million monthly downloads, thousands of organizations rely on MLflow each day to ship AI to production with confidence.

MLflow's comprehensive feature set for agents and LLM applications includes production-grade observability, evaluation, prompt management, an AI Gateway for managing costs and model access, and more. Learn more at MLflow for LLMs and Agents.

For machine learning (ML) model development, MLflow provides experiment tracking, model evaluation capabilities, a production model registry, and model deployment tools.