Tracing Ollama

MLflow Tracing provides automatic tracing for Ollama models through its OpenAI SDK integration. Because Ollama exposes an OpenAI-compatible API, calling mlflow.openai.autolog() is enough to trace Ollama calls.

MLflow Tracing automatically captures the following information about Ollama calls:

- Prompts and completion responses

- Latencies

- Token usage

- Model name

- Additional metadata such as temperature and max_tokens, if specified

- Function calling, if returned in the response

- Any exception if raised

- and more...
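To make the list above concrete, the following sketch pulls the same kinds of fields out of an OpenAI-compatible chat completion payload. It is an illustration only, not MLflow's internal implementation: the `summarize_completion` helper and the sample payload are hypothetical, and the field names are assumed to follow the OpenAI chat completion response format.

```python
from typing import Any


def summarize_completion(payload: dict[str, Any]) -> dict[str, Any]:
    """Extract the kinds of fields a trace records from a chat completion.

    `payload` is assumed to follow the OpenAI chat completion response
    format, which Ollama's /v1 endpoint mirrors.
    """
    message = payload["choices"][0]["message"]
    usage = payload.get("usage") or {}
    return {
        "model": payload.get("model"),
        "completion": message.get("content"),
        # Names of any functions the model asked to call.
        "tool_calls": [
            call["function"]["name"] for call in message.get("tool_calls") or []
        ],
        "prompt_tokens": usage.get("prompt_tokens"),
        "completion_tokens": usage.get("completion_tokens"),
        "total_tokens": usage.get("total_tokens"),
    }


# Hypothetical payload, shaped like a response from Ollama's /v1 endpoint.
sample = {
    "model": "llama3.2:1b",
    "choices": [
        {"message": {"role": "assistant", "content": "Rayleigh scattering."}}
    ],
    "usage": {"prompt_tokens": 12, "completion_tokens": 4, "total_tokens": 16},
}

summary = summarize_completion(sample)
print(summary)
```

Latency and exceptions are not part of the payload; they would be measured around the call itself.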

Getting Started

Install dependencies

- Python: pip install 'mlflow[genai]' openai

- JS / TS: npm install mlflow-openai openai

Start MLflow server

- Local (pip): If you have a local Python environment (3.10 or later), you can start the MLflow server locally with the mlflow CLI.

mlflow server

- Local (docker): MLflow also provides a Docker Compose file that starts a local MLflow server together with a PostgreSQL database and a MinIO server.

git clone --depth 1 --filter=blob:none --sparse https://github.com/mlflow/mlflow.git

cd mlflow

git sparse-checkout set docker-compose

cd docker-compose

cp .env.dev.example .env

docker compose up -d

Refer to the instructions for more details, such as how to override the default environment variables.

Run Ollama server

Ensure your Ollama server is running and the model you want to use is pulled.

ollama run llama3.2:1b
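Before enabling tracing, it can help to confirm that the server is reachable and the model has been pulled. The sketch below queries Ollama's native `/api/tags` endpoint, which lists local models. It assumes the default address `http://localhost:11434`; the helper names `list_local_models` and `model_is_pulled` are hypothetical.

```python
import json
import urllib.request


def model_is_pulled(tags_payload: dict, model: str) -> bool:
    """Return True if `model` appears in a /api/tags response payload."""
    names = {entry.get("name") for entry in tags_payload.get("models", [])}
    return model in names


def list_local_models(base_url: str = "http://localhost:11434") -> dict:
    """Fetch the list of locally available models from the Ollama server."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return json.load(resp)


if __name__ == "__main__":
    try:
        payload = list_local_models()
    except (OSError, ValueError) as exc:
        # OSError covers connection failures; ValueError covers invalid JSON.
        print(f"Could not query the Ollama server: {exc}")
    else:
        print("llama3.2:1b pulled:", model_is_pulled(payload, "llama3.2:1b"))
```

Note that the comparison is on the full tag, so a model pulled without an explicit tag would be listed as `name:latest`.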

Enable tracing and call Ollama

- Python

import mlflow
from openai import OpenAI

# Enable auto-tracing for OpenAI (works with Ollama)
mlflow.openai.autolog()

# Optional: Set a tracking URI and an experiment
mlflow.set_tracking_uri("http://localhost:5000")
mlflow.set_experiment("Ollama")

# Initialize the OpenAI client with the Ollama API endpoint
client = OpenAI(
    base_url="http://localhost:11434/v1",
    api_key="dummy",  # required by the client, but not checked by Ollama
)

response = client.chat.completions.create(
    model="llama3.2:1b",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Why is the sky blue?"},
    ],
    temperature=0.1,
    max_tokens=100,
)

- JS / TS

import { OpenAI } from "openai";
import { tracedOpenAI } from "mlflow-openai";

// Wrap the OpenAI client and point it to the Ollama endpoint
const client = tracedOpenAI(
  new OpenAI({
    baseURL: "http://localhost:11434/v1",
    apiKey: "dummy",
  })
);

const response = await client.chat.completions.create({
  model: "llama3.2:1b",
  messages: [
    { role: "system", content: "You are a helpful assistant." },
    { role: "user", content: "Why is the sky blue?" },
  ],
  temperature: 0.1,
  max_tokens: 100,
});

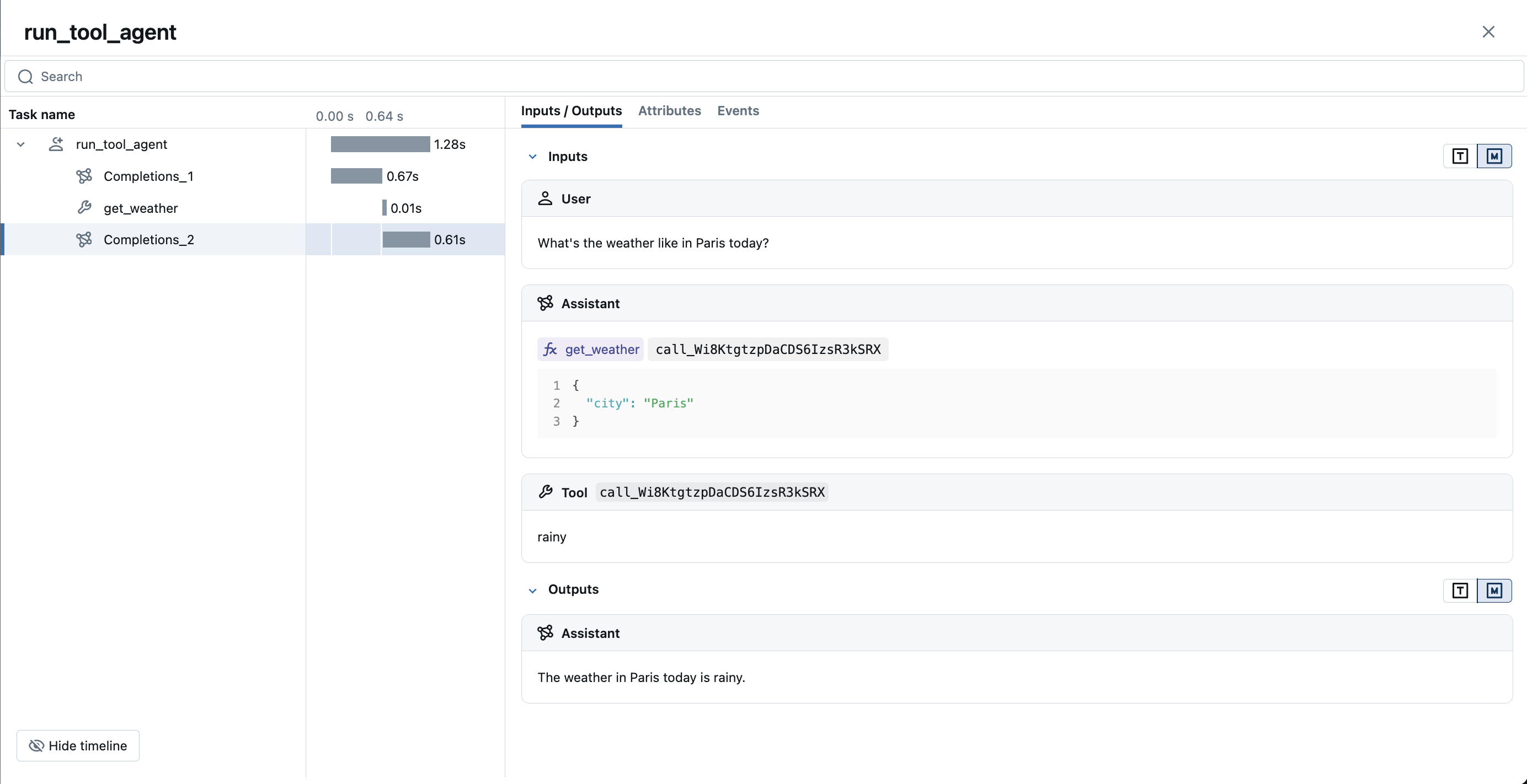

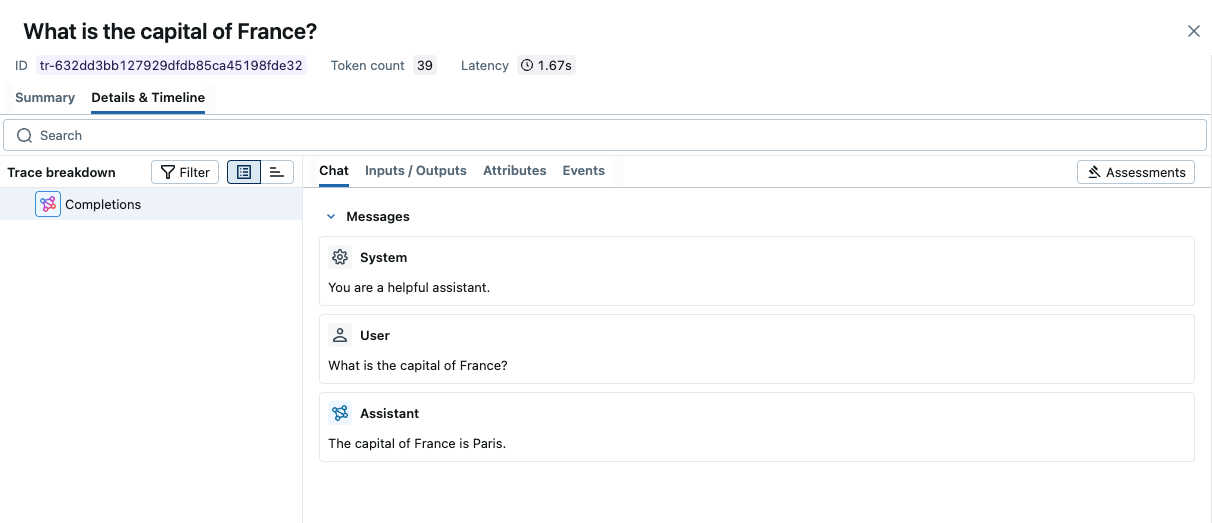

View traces in MLflow UI

Browse to your MLflow UI (for example, http://localhost:5000) and open the Ollama experiment to see traces for the calls above.

→ See Next steps to learn about more MLflow features such as user feedback tracking, prompt management, and evaluation.

Supported APIs

MLflow supports automatic tracing for the following Ollama APIs through the OpenAI integration:

| Chat Completion | Function Calling | Streaming | Async |
|---|---|---|---|
| ✅ | ✅ | ✅ (*1) | ✅ (*2) |

(*1) Streaming support requires MLflow 2.15.0 or later.

(*2) Async support requires MLflow 2.21.0 or later.

To request support for additional APIs, please open a feature request on GitHub.

Streaming and Async Support

MLflow supports tracing for streaming and async Ollama APIs. Visit the OpenAI Tracing documentation for example code snippets that trace streaming and async calls through the OpenAI SDK.
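As a rough illustration of what those calls look like against Ollama, the sketch below defines one streaming and one async call. It assumes the `openai` package is installed, Ollama is listening on `http://localhost:11434`, and `mlflow.openai.autolog()` has already been called. `collect_stream`, `stream_answer`, and `async_answer` are hypothetical helper names.

```python
from typing import Iterable

OLLAMA_URL = "http://localhost:11434/v1"
MESSAGES = [{"role": "user", "content": "Why is the sky blue?"}]


def collect_stream(chunks: Iterable) -> str:
    """Join the text deltas of streamed chat completion chunks."""
    parts = []
    for chunk in chunks:
        # Some chunks (for example a final usage chunk) carry no choices.
        if chunk.choices and chunk.choices[0].delta.content:
            parts.append(chunk.choices[0].delta.content)
    return "".join(parts)


def stream_answer(model: str = "llama3.2:1b") -> str:
    """Streaming call: the response arrives as an iterator of chunks."""
    from openai import OpenAI  # local import keeps collect_stream dependency-free

    client = OpenAI(base_url=OLLAMA_URL, api_key="dummy")
    stream = client.chat.completions.create(
        model=model, messages=MESSAGES, stream=True
    )
    return collect_stream(stream)


async def async_answer(model: str = "llama3.2:1b") -> str:
    """Async call using the AsyncOpenAI client."""
    from openai import AsyncOpenAI

    client = AsyncOpenAI(base_url=OLLAMA_URL, api_key="dummy")
    response = await client.chat.completions.create(model=model, messages=MESSAGES)
    return response.choices[0].message.content
```

With a running server, `stream_answer()` can be called directly and `async_answer()` can be run with `asyncio.run(async_answer())`.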

Combine with Manual Tracing

To control the tracing behavior more precisely, MLflow provides a Manual Tracing SDK for creating spans around your custom code. Manual tracing can be combined with auto-tracing to build a custom trace while keeping the convenience of auto-tracing. For more details, refer to the Combine with Manual Tracing section in the OpenAI Tracing documentation.
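As a minimal sketch of that combination, the function below opens a manual span with `mlflow.start_span` and makes the chat completion call inside it, so the span created by auto-tracing is expected to be nested under the manual one. It assumes `mlflow` and `openai` are installed and Ollama is running locally. `build_messages` and `answer_question` are hypothetical names, not part of either library.

```python
def build_messages(question: str) -> list[dict[str, str]]:
    """Custom pre-processing step: wrap the question in a chat prompt."""
    return [
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": question},
    ]


def answer_question(question: str, model: str = "llama3.2:1b") -> str:
    """Run custom code and an auto-traced LLM call under one manual span."""
    # Imported here so build_messages can be used without these packages.
    import mlflow
    from openai import OpenAI

    mlflow.openai.autolog()
    client = OpenAI(base_url="http://localhost:11434/v1", api_key="dummy")

    # Manual parent span; the auto-traced call below becomes its child.
    with mlflow.start_span(name="answer_question") as span:
        span.set_inputs({"question": question})
        response = client.chat.completions.create(
            model=model, messages=build_messages(question)
        )
        answer = response.choices[0].message.content
        span.set_outputs({"answer": answer})
    return answer
```

Calling `answer_question("Why is the sky blue?")` against a running server should then yield a single trace containing both spans.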

Tracking Token Usage and Cost

MLflow automatically tracks token usage and cost for Ollama models through the OpenAI SDK integration. The token usage of each LLM call is logged on the corresponding trace and span, and the aggregated cost and time trends are displayed in the built-in dashboard. See the Token Usage and Cost Tracking documentation for details on accessing this information programmatically.

Cost may not be available for many local Ollama models because no pricing data exists for them.
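As a sketch of programmatic access to token usage, the snippet below sums per-call usage dictionaries and, in `last_trace_usage`, reads the usage recorded on the most recent trace. The `input_tokens` / `output_tokens` / `total_tokens` keys, `mlflow.get_last_active_trace_id()`, and the `trace.info.token_usage` attribute are assumptions based on recent MLflow 3.x releases and may differ in other versions. `sum_usage` and `last_trace_usage` are hypothetical names.

```python
from collections import Counter
from typing import Iterable, Mapping


def sum_usage(usages: Iterable[Mapping[str, int]]) -> dict[str, int]:
    """Add up token counts across several LLM calls."""
    total: Counter = Counter()
    for usage in usages:
        total.update(usage)  # Counter.update adds counts key by key
    return dict(total)


def last_trace_usage() -> dict:
    """Return the token usage recorded on the most recent trace, if any."""
    import mlflow  # assumed: an MLflow version exposing the calls below

    trace_id = mlflow.get_last_active_trace_id()
    if trace_id is None:
        return {}
    trace = mlflow.get_trace(trace_id)
    return dict(trace.info.token_usage or {})


# Hypothetical usage records for two calls.
calls = [
    {"input_tokens": 12, "output_tokens": 40, "total_tokens": 52},
    {"input_tokens": 8, "output_tokens": 30, "total_tokens": 38},
]
print(sum_usage(calls))
```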

Disable auto-tracing

Auto-tracing for Ollama (through the OpenAI SDK) can be disabled globally by calling mlflow.openai.autolog(disable=True) or mlflow.autolog(disable=True).

Next steps

Track User Feedback

Record user feedback on traces for tracking user satisfaction.

Manage Prompts

Learn how to manage prompts with MLflow's prompt registry.

Evaluate Traces

Evaluate traces with LLM judges to understand and improve your AI application's behavior.